model module

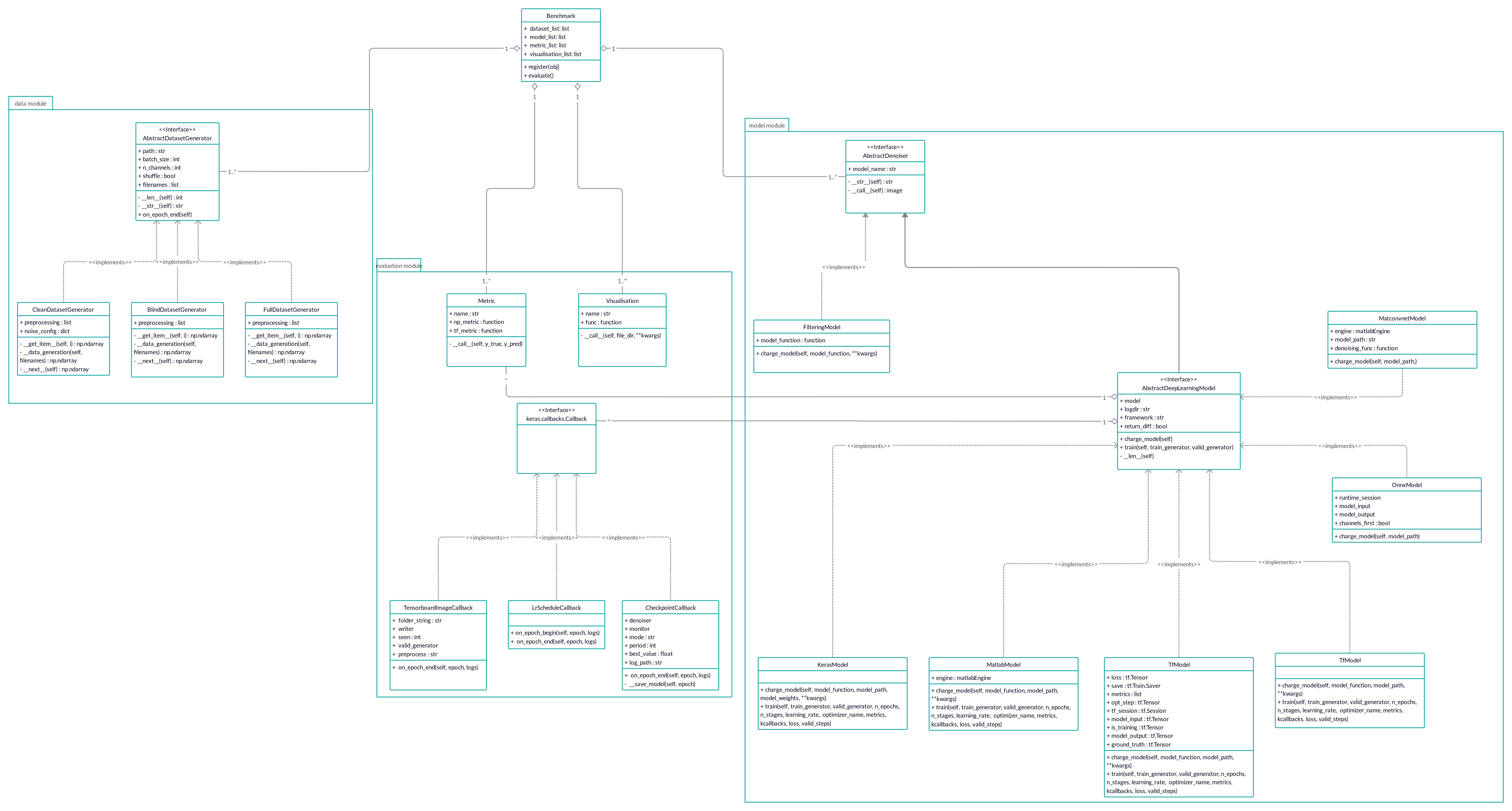

This module contains classes used for wrapping denoising algorithms in order to provide common functionality and behavior.

The first distinction we make is between deep-learning-based denoising algorithms and filtering algorithms. The functionality of these two kinds of models is standardized by model.AbstractDenoiser.

Deep learning models, however, are based on frameworks such as Keras and Pytorch, which differ in syntax. To standardize the behavior of deep learning models across frameworks, we propose the model.AbstractDeepLearningModel interface, which unifies three kinds of functionality that all deep learning models should provide:

- charge_model, which builds the computational graph of the network.

- train, which trains the network on data.

- inference, which denoises data.
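The three-method contract can be illustrated with a minimal, self-contained sketch. The classes `DeepLearningDenoiserSketch` and `ScalarGainDenoiser` are hypothetical stand-ins written for this page, not OpenDenoising classes; the toy "network" is a single scalar gain fitted by least squares, chosen only so that the example runs with NumPy alone.

```python
from abc import ABC, abstractmethod

import numpy as np


class DeepLearningDenoiserSketch(ABC):
    """Hypothetical stand-in mirroring charge_model / train / inference."""

    def __init__(self, model_name="DeepLearningModel"):
        self.model_name = model_name
        self.model = None

    @abstractmethod
    def charge_model(self, **kwargs):
        """Build (or load) the model."""

    @abstractmethod
    def train(self, noisy, clean):
        """Fit the model on paired (noisy, clean) data."""

    @abstractmethod
    def __call__(self, image):
        """Inference: denoise a batch shaped (batch_size, height, width, channels)."""


class ScalarGainDenoiser(DeepLearningDenoiserSketch):
    """Toy model: the prediction is gain * noisy, with one learnable scalar."""

    def charge_model(self, initial_gain=1.0):
        # "Building the graph" reduces to initializing a single parameter.
        self.model = {"gain": float(initial_gain)}

    def train(self, noisy, clean):
        # Closed-form least squares: argmin_g ||g * noisy - clean||^2.
        self.model["gain"] = float(np.sum(noisy * clean) / np.sum(noisy * noisy))

    def __call__(self, image):
        if image.ndim != 4:
            raise ValueError("expected shape (batch_size, height, width, channels)")
        return self.model["gain"] * image


rng = np.random.default_rng(0)
noisy = rng.random((2, 8, 8, 1))
clean = 0.5 * noisy  # toy target: the ideal gain is 0.5
denoiser = ScalarGainDenoiser(model_name="toy")
denoiser.charge_model()
denoiser.train(noisy, clean)
print(denoiser(noisy).shape)  # (2, 8, 8, 1)
```

Keeping building, training and inference as separate methods is what lets a benchmark drive models from different frameworks through the same calls.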

To give a better idea of the class structure and its relations to the other modules, we provide a UML class diagram (image 1).

The documentation of this module is divided as follows:

- Interface Classes: Documents the two main abstract classes which are AbstractDenoiser and AbstractDeepLearningModel.

- Wrapper Classes: Documents the seven wrapper classes in the benchmark: FilteringModel, KerasModel, PytorchModel, TfModel, MatlabModel, OnnxModel and MatconvnetModel.

- Built-in Architectures: Documents the neural network architectures already provided by the benchmark.

- Filtering functions: Documents the filtering functions already provided by the benchmark.

- Model utilities: Documents functions for model conversion and graph editing.

Denoising Models

Interface Classes

class model.AbstractDenoiser(model_name='DenoisingModel')

Bases: abc.ABC

Common interface for Denoiser classes. This class defines the basic functionality that every Denoiser must provide, such as the __call__ method, which takes a noisy image as input and returns its reconstruction.

model_name: Model string identifier.

Type: string

__call__(self, image): Denoises a given image.

Parameters: image (numpy.ndarray) – Batch of noisy images. Expects a 4D array with shape (batch_size, height, width, channels).
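Callers often hold a single image rather than a 4D batch. The sketch below shows a hypothetical helper, `to_nhwc_batch` (not part of OpenDenoising), that promotes such inputs to the (batch_size, height, width, channels) layout expected by __call__. It assumes a 3D input is one channels-last image, not a batch of grayscale images.

```python
import numpy as np


def to_nhwc_batch(image):
    """Hypothetical helper: return `image` shaped (batch_size, height, width, channels)."""
    image = np.asarray(image)
    if image.ndim == 2:  # (height, width): single grayscale image
        return image[np.newaxis, :, :, np.newaxis]
    if image.ndim == 3:  # (height, width, channels): assumed single channels-last image
        return image[np.newaxis, ...]
    if image.ndim == 4:  # already a batch
        return image
    raise ValueError(f"cannot interpret a {image.ndim}D array as an image batch")


gray = np.zeros((32, 48))
print(to_nhwc_batch(gray).shape)  # (1, 32, 48, 1)
```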

class model.AbstractDeepLearningModel(model_name='DeepLearningModel', logdir='./training_logs/', framework=None, return_diff=False)

Bases: OpenDenoising.model.abstract_denoiser.AbstractDenoiser

Common interface for Deep Learning based image denoisers.

__call__(self, image): Denoises a given image.

Parameters: image (numpy.ndarray) – Batch of noisy images. Expects a 4D array with shape (batch_size, height, width, channels).

__init__(self, model_name='DeepLearningModel', logdir='./training_logs/', framework=None, return_diff=False): Common interface for Deep Learning based image denoisers.

model: Object representing the Denoiser Model in the framework used.

logdir: String containing the path to the model log directory. This directory will contain training information as well as model checkpoints.

Type: str

train_info: Dictionary containing the time spent on training, how many parameters the network has, and whether it has been trained.

Type: dict

Wrapper Classes

class model.FilteringModel(model_name='FilteringModel')

Bases: OpenDenoising.model.abstract_denoiser.AbstractDenoiser

FilteringModel represents a Denoising Model that does not depend on Neural Networks.

model_function: Filtering denoising function. It should accept at least one argument, an image batch (numpy.ndarray), and return a single numpy.ndarray with the same shape, corresponding to the denoising result.

Type: function

__call__(self, image): Denoises a batch of noisy images.

Parameters: image (numpy.ndarray) – Batch of images with shape (batch_size, height, width, channels).

Returns: Batch of images denoised by model_function, with shape (batch_size, height, width, channels).

Return type: numpy.ndarray

__init__(self, model_name='FilteringModel'): Initialize self. See help(type(self)) for accurate signature.

__repr__(self): Return repr(self).

charge_model(self, model_function, **kwargs): Charges the denoising function into the class wrapper.

Parameters: model_function (function) – Filtering denoising function. It should accept at least one argument, an image batch (numpy.ndarray), and return a single numpy.ndarray with the same shape, corresponding to the denoising result. If your function needs more arguments than the noisy image batch, these can be passed as keyword arguments to charge_model (see examples section).
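As a sketch of the model_function contract, the hypothetical `mean_filter` below (not shipped with OpenDenoising) takes a 4D batch and returns an array of the same shape. Its `size` keyword stands in for the kind of extra argument that charge_model forwards as a keyword argument; `functools.partial` is used here only to show the equivalent binding without depending on the library.

```python
import functools

import numpy as np


def mean_filter(image, size=3):
    """Box (mean) filter applied independently to each image and channel.

    image: array of shape (batch_size, height, width, channels).
    size: odd window width; borders are handled by edge replication.
    """
    if size % 2 == 0:
        raise ValueError("size must be odd")
    pad = size // 2
    padded = np.pad(image, ((0, 0), (pad, pad), (pad, pad), (0, 0)), mode="edge")
    height, width = image.shape[1], image.shape[2]
    out = np.zeros(image.shape, dtype=np.float64)
    # Accumulate the size * size shifted windows, then divide by the window area.
    for dy in range(size):
        for dx in range(size):
            out += padded[:, dy:dy + height, dx:dx + width, :]
    return out / (size * size)


# Binding the extra keyword, analogous to charge_model(mean_filter, size=5).
denoise = functools.partial(mean_filter, size=5)
noisy = np.random.default_rng(0).normal(0.5, 0.1, size=(2, 16, 16, 1))
print(denoise(noisy).shape)  # (2, 16, 16, 1)
```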

class model.KerasModel(model_name='DeepLearningModel', logdir='./logs/Keras', return_diff=False)

Bases: OpenDenoising.model.abstract_deep_learning_model.AbstractDeepLearningModel

KerasModel wrapper class.

model: Denoiser Keras model used for training and inference.

Type: keras.models.Model

return_diff: If True, return the difference between the predicted image and the input image at inference time.

Type: bool

See also

model.AbstractDenoiser – for the basic functionalities of Image Denoisers.

model.AbstractDeepLearningModel – for the basic functionalities of Deep Learning based Denoisers.

__call__(self, image): Denoises a batch of images.

Parameters: image (numpy.ndarray) – 4D batch of noisy images, with shape (batch_size, height, width, channels).

Returns: Restored batch of images, with the same shape as the input.

Return type: numpy.ndarray

__init__(self, model_name='DeepLearningModel', logdir='./logs/Keras', return_diff=False): Common interface for Deep Learning based image denoisers.

model: Object representing the Denoiser Model in the framework used.

logdir: String containing the path to the model log directory. This directory will contain training information as well as model checkpoints.

Type: str

train_info: Dictionary containing the time spent on training, how many parameters the network has, and whether it has been trained.

Type: dict

framework: String containing the name of the chosen framework (e.g. Keras, Tensorflow, Pytorch).

Type: str

return_diff: If True, return the difference between the predicted image and the input image.

Type: bool

__len__(self): Counts the number of parameters in the network.

Returns: nparams – Number of parameters in the network.

Return type: int

charge_model(self, model_function=None, model_path=None, model_weights=None, **kwargs): Keras model charging function.

There are four main cases for the charge_model function:

- Charge the model architecture by using a building function, model_function.

- Charge the model using a .json file, previously saved from an existing architecture through the method keras.models.Model.to_json().

- Charge the model using a .yaml file, previously saved from an existing architecture through the method keras.models.Model.to_yaml().

- Charge the model using a .hdf5 file, previously saved from an existing architecture through the method keras.models.Model.save().

Of these four cases, only the last one loads the model architecture and its weights at the same time. Therefore, in the other cases you should specify model_weights when loading your model, so that this class can find and charge your model weights, which can be saved in either .h5 or .hdf5 format.

If your architecture has not been trained yet, you can train it and then save the weights with the keras.models.Model.save_weights() method, or use the KerasModel.train() method of this class.

Parameters:

Notes

If your building function accepts optional arguments, you can specify them through kwargs.

Examples

Loading Keras DnCNN from its building function. Notice that in our implementation, depth is an optional argument.

>>> from OpenDenoising import model
>>> mymodel = model.KerasModel(model_name="mymodel")
>>> mymodel.charge_model(model_function=model.architectures.keras.dncnn, depth=17)

Loading Keras DnCNN from a .hdf5 file.

>>> from OpenDenoising import model
>>> mymodel = model.KerasModel(model_name="mymodel")
>>> mymodel.charge_model(model_path=PATH)

Loading Keras DnCNN from a .json + .hdf5 file.

>>> from OpenDenoising import model
>>> mymodel = model.KerasModel(model_name="mymodel")
>>> mymodel.charge_model(model_path=PATH_TO_JSON, model_weights=PATH_TO_HDF5)
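The four loading cases described for charge_model amount to a decision on which arguments are given and on the file extension. The function below is a hypothetical illustration of that decision (its name and return strings are invented here); it does not call Keras and is not the library's implementation.

```python
import os


def keras_charge_case(model_function=None, model_path=None, model_weights=None):
    """Hypothetical summary of which KerasModel.charge_model case applies."""
    if model_function is not None:
        case = "build architecture from function"
    elif model_path is not None:
        ext = os.path.splitext(model_path)[1].lower()
        if ext == ".json":
            case = "architecture from JSON"
        elif ext == ".yaml":
            case = "architecture from YAML"
        elif ext == ".hdf5":
            # Only this case restores architecture and weights together.
            return "architecture and weights from HDF5"
        else:
            raise ValueError(f"unsupported model file extension: {ext!r}")
    else:
        raise ValueError("specify model_function or model_path")
    # Architecture-only cases still need weights (a .h5/.hdf5 file, or training).
    if model_weights is None:
        return case + " (untrained: weights still needed)"
    return case + " + weights from " + os.path.basename(model_weights)


print(keras_charge_case(model_path="dncnn.json", model_weights="dncnn_weights.hdf5"))
```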

train(self, train_generator, valid_generator=None, n_epochs=100.0, n_stages=500.0, learning_rate=0.001, optimizer_name=None, metrics=None, kcallbacks=None, loss=None, valid_steps=10, **kwargs): Runs the training of a Keras Model.

Notes

There are two cases where training should be launched:

- You only loaded your model architecture. In that case, this function will train your model from scratch using the dataset specified by train_generator and valid_generator.

- You loaded both an architecture and weights for your model, and you want to reuse them. This may be the case when you want to train for a few more epochs, or to perform transfer learning.

Parameters:

- train_generator (data.AbstractDataset) – Training data generator. These generators should output paired image samples: first a noisy version of the image, then the ground truth.

- valid_generator (data.AbstractDataset) – Validation data generator.

- n_epochs (int) – Number of epochs for which the training will be executed.

- n_stages (int) – Number of data batches drawn per epoch.

- learning_rate (float) – Initial learning rate for optimization.

- optimizer_name (str) – Name of the optimizer employed. Check the Keras documentation for more information.

- metrics (list) – List of tensorflow functions implementing scalar metrics (see metrics in evaluation).

- kcallbacks (list) – List of keras callback instances. Consult the Keras documentation and the evaluation module for more information.

- loss (function) – Tensorflow-based loss function. It should take two Tensors as input and output a scalar Tensor holding the loss value.

- valid_steps (int) – Number of batches drawn during evaluation.
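A training generator must yield paired samples: first the noisy image, then the ground truth. The sketch below is a plain Python generator with that pairing, assuming additive Gaussian noise on images in [0, 1]. `paired_batches` is a hypothetical stand-in for illustration and does not implement data.AbstractDataset. It also shows that one training run draws n_epochs * n_stages batches.

```python
import numpy as np


def paired_batches(clean_images, batch_size=4, sigma=0.1, seed=0):
    """Yield (noisy, clean) batches indefinitely, shaped (batch_size, H, W, C).

    Hypothetical stand-in for a training generator; assumes additive
    Gaussian noise with standard deviation `sigma`.
    """
    rng = np.random.default_rng(seed)
    n = clean_images.shape[0]
    while True:
        idx = rng.choice(n, size=batch_size, replace=False)
        clean = clean_images[idx]
        noisy = clean + rng.normal(0.0, sigma, size=clean.shape)
        yield noisy, clean


n_epochs, n_stages = 2, 5
images = np.random.default_rng(1).random((10, 32, 32, 1))
gen = paired_batches(images)
n_batches = 0
for _ in range(n_epochs):
    for _ in range(n_stages):  # n_stages batches per epoch
        noisy, clean = next(gen)
        n_batches += 1
print(n_batches)  # 10
```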

class model.PytorchModel(model_name='DeepLearningModel', logdir='./logs/Pytorch', return_diff=False)

Bases: OpenDenoising.model.abstract_deep_learning_model.AbstractDeepLearningModel

Pytorch wrapper class.

See also

model.AbstractDenoiser – for the basic functionalities of Image Denoisers.

model.AbstractDeepLearningModel – for the basic functionalities of Deep Learning based Denoisers.

__call__(self, image): Denoises a batch of images.

Parameters: image (numpy.ndarray) – 4D batch of noisy images, with shape (batch_size, height, width, channels).

Returns: Restored batch of images, with the same shape as the input.

Return type: numpy.ndarray

__init__(self, model_name='DeepLearningModel', logdir='./logs/Pytorch', return_diff=False): Common interface for Deep Learning based image denoisers.

model: Object representing the Denoiser Model in the framework used.

logdir: String containing the path to the model log directory. This directory will contain training information as well as model checkpoints.

Type: str

train_info: Dictionary containing the time spent on training, how many parameters the network has, and whether it has been trained.

Type: dict

__len__(self): Counts the number of parameters in the network.

Returns: nparams – Number of parameters in the network.

Return type: int

charge_model(self, model_function=None, model_path=None, **kwargs): Pytorch model charging function. You can charge a model either by specifying a class that implements the network architecture (passing it through model_function) or by specifying the path to a .pt or .pth file. If your class constructor accepts optional arguments, you can specify them through keyword arguments.

Parameters:

- model_function (torch.nn.Module) – Pytorch network class implementing the network architecture.

- model_path (str) – String containing the path to a .pt or .pth file.

Examples

Loading Pytorch DnCNN from its class. Notice that in our implementation, depth is an optional argument.

>>> from OpenDenoising import model
>>> mymodel = model.PytorchModel(model_name="mymodel")
>>> mymodel.charge_model(model_function=model.architectures.pytorch.DnCNN, depth=17)

Loading Pytorch DnCNN from a file.

>>> from OpenDenoising import model
>>> mymodel = model.PytorchModel(model_name="mymodel")
>>> mymodel.charge_model(model_path=PATH)

train(self, train_generator, valid_generator=None, n_epochs=250, n_stages=500, learning_rate=0.001, metrics=None, optimizer_name=None, kcallbacks=None, loss=None, valid_steps=10): Trains a Pytorch model.

Parameters:

- train_generator (data.AbstractDataset) – Dataset object inheriting from the AbstractDataset class. It is a generator object that yields data from the training dataset folder.

- valid_generator (data.AbstractDataset) – Dataset object inheriting from the AbstractDataset class. It is a generator object that yields data from the validation dataset folder.

- n_epochs (int) – Number of training epochs.

- n_stages (int) – Number of batches seen per epoch.

- learning_rate (float) – Constant learning rate for the optimizer, or initial value when training with a dynamic learning rate (see callbacks).

- metrics (list) – List of metric functions. Each function takes two numpy.ndarray instances as input and returns a float corresponding to the metric computed on those two arrays. For more information, take a look at the Benchmarking module.

- optimizer_name (str) – Name of the optimizer to use. Check the Pytorch documentation for a complete list.

- kcallbacks (list) – List of custom_callbacks.

- loss (torch.nn.modules.loss) – Pytorch loss function.

- valid_steps (int) – If valid_generator was specified, valid_steps determines the number of validation batches seen per validation run.
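As an example of the metric contract for PytorchModel.train (two numpy.ndarray inputs, one float output), a peak signal-to-noise ratio can be written as below. The assumptions are images scaled to [0, 1] (hence `max_value=1.0`) and the argument order `(y_true, y_pred)`; the benchmark's own metric functions may differ.

```python
import numpy as np


def psnr(y_true, y_pred, max_value=1.0):
    """PSNR in dB: 10 * log10(max_value**2 / MSE)."""
    diff = np.asarray(y_true, dtype=np.float64) - np.asarray(y_pred, dtype=np.float64)
    mse = np.mean(diff ** 2)
    if mse == 0:
        return float("inf")  # identical images
    return float(10.0 * np.log10(max_value ** 2 / mse))


clean = np.zeros((1, 8, 8, 1))
noisy = clean + 0.1  # constant error of 0.1, so MSE = 0.01
print(round(psnr(clean, noisy), 6))  # 20.0
```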

class model.TfModel(model_name='DeepLearningModel', logdir='./logs/Tensorflow', return_diff=False)

Bases: OpenDenoising.model.abstract_deep_learning_model.AbstractDeepLearningModel

Tensorflow model class wrapper.

Parameters:

- loss (tf.Tensor) – Tensor holding the computation for the loss function.

- saver (tf.train.Saver) – Object for saving the model at each epoch iteration.

- metrics (list) – List of tf.Tensor objects holding the computation for each metric.

- opt_step (tf.Operation) – Tensorflow operation corresponding to the update performed on the model’s variables.

- tf_session (tf.Session) – Instance of tensorflow session.

- model_input (tf.Tensor) – Tensor corresponding to the model’s input.

- is_training (tf.Tensor) – If batch normalization is used, corresponds to a tf placeholder controlling the training and inference phases of batch normalization.

- model_output (tf.Tensor) – Tensor corresponding to the model’s output.

- ground_truth (tf.placeholder) – Placeholder corresponding to the original (clean) training images.

See also

model.AbstractDenoiser – for the basic functionalities of Image Denoisers.

model.AbstractDeepLearningModel – for the basic functionalities of Deep Learning based Denoisers.

__call__(self, image): Denoises a batch of images.

Parameters: image (np.ndarray) – 4D batch of noisy images, with shape (batch_size, height, width, channels).

Returns: Restored batch of images, with the same shape as the input.

Return type: np.ndarray

__init__(self, model_name='DeepLearningModel', logdir='./logs/Tensorflow', return_diff=False): Common interface for Deep Learning based image denoisers.

model: Object representing the Denoiser Model in the framework used.

logdir: String containing the path to the model log directory. This directory will contain training information as well as model checkpoints.

Type: str

train_info: Dictionary containing the time spent on training, how many parameters the network has, and whether it has been trained.

Type: dict

__len__(self): Counts the number of parameters in the network.

Returns: nparams – Number of parameters in the network.

Return type: int

charge_model(self, model_function=None, model_path=None, **kwargs): Charges a Tensorflow model into the wrapper class by using a model file or a building architecture function.

Parameters:

- model_function (function) – Building architecture function, which returns at least two tensors: one for the graph input, and another for the graph output.

- model_path (str) – String containing the path to a .pb or .meta file holding the computational graph of the model. Note that each of these files corresponds to a different tensorflow saving API:

- .pb files correspond to the saved_model API. This API saves the model in a folder containing the .pb file, along with a folder called “variables” holding the weight values.

- .meta files correspond to the tf.train API. This API saves the model through four files (.meta, .index, .data and checkpoint).

You can save your models in either of these two formats.

Notes

This function accepts keyword arguments, which can be used to pass additional parameters to model_function.

Examples

Loading Tensorflow DnCNN from its building function. Notice that in our implementation, depth is an optional argument.

>>> from OpenDenoising import model
>>> mymodel = model.TfModel(model_name="mymodel")
>>> mymodel.charge_model(model_function=model.architectures.tensorflow.dncnn, depth=17)

Loading Tensorflow DnCNN from a file. Note that the file you charge into TfModel needs to be a .pb or a .meta file. In the first case, we assume you have used the SavedModel API; in the second, the Checkpoint API.

>>> from OpenDenoising import model
>>> mymodel = model.TfModel(model_name="mymodel")
>>> mymodel.charge_model(model_path=PATH_TO_PB_OR_META)

train(self, train_generator, valid_generator=None, n_epochs=250, n_stages=500, learning_rate=0.001, metrics=None, optimizer_name=None, kcallbacks=None, loss=None, valid_steps=10, saving_api='SavedModel'): Trains a tensorflow model.

Parameters:

- train_generator (data.AbstractDataset) – Dataset object inheriting from the AbstractDataset class. It is a generator object that yields data from the training dataset folder.

- valid_generator (data.AbstractDataset) – Dataset object inheriting from the AbstractDataset class. It is a generator object that yields data from the validation dataset folder.

- n_epochs (int) – Number of training epochs.

- n_stages (int) – Number of batches seen per epoch.

- learning_rate (float) – Constant learning rate for the optimizer, or initial value when training with a dynamic learning rate (see callbacks).

- metrics (list) – List of tensorflow functions implementing scalar metrics (see metrics in evaluation).

- optimizer_name (str) – Name of the optimizer to use. Check the tf.train documentation for a complete list.

- kcallbacks (list) – List of callbacks.

- loss (function) – Tensorflow-based loss function. It should take two Tensors as input and output a scalar Tensor holding the loss value.

- valid_steps (int) – If valid_generator was specified, valid_steps determines the number of validation batches seen per validation run.

- saving_api (string) – If saving_api = “tftrain”, uses tf.train.Saver as the model saver. Otherwise, uses the saved_model API.

class model.MatlabModel(model_name='MatlabModel', logdir='./logs/Matlab', return_diff=False)

Bases: OpenDenoising.model.abstract_deep_learning_model.AbstractDeepLearningModel

Matlab Deep Learning Toolbox wrapper class.

Notes

To use this class you need a Matlab license with access to the Deep Learning Toolbox.

See also

model.AbstractDenoiser – for the basic functionalities of Image Denoisers.

model.AbstractDeepLearningModel – for the basic functionalities of Deep Learning based Denoisers.

__call__(self, image): Denoises a batch of images.

Notes

To perform inference with a MatlabModel, you need a variable called ‘net’ in Matlab’s workspace. This variable is either the output of a training session or the result of load(‘obj’).

Parameters: image (numpy.ndarray) – 4D batch of noisy images, with shape (batch_size, height, width, channels).

Returns: Restored batch of images, with the same shape as the input.

Return type: numpy.ndarray

__init__(self, model_name='MatlabModel', logdir='./logs/Matlab', return_diff=False): Common interface for Deep Learning based image denoisers.

model: Object representing the Denoiser Model in the framework used.

logdir: String containing the path to the model log directory. This directory will contain training information as well as model checkpoints.

Type: str

train_info: Dictionary containing the time spent on training, how many parameters the network has, and whether it has been trained.

Type: dict

__len__(self): Counts the number of parameters in the network.

Returns: nparams – Number of parameters in the network.

Return type: int

charge_model(self, model_function=None, model_path=None, **kwargs): MatlabModel charging function.

This function uses Matlab’s Python engine to make internal Matlab calls. You can specify either the path to a Matlab function that builds the model, or the path to a .mat file holding the pretrained model.

Notes

You may pass optional arguments to the model building function through keyword arguments.

Parameters:

train(self, train_generator, valid_generator=None, n_epochs=250, n_stages=500, learning_rate=0.001, optimizer_name='adam', valid_steps=10): Trains a Matlab denoiser model.

Notes

Instead of the Clean/Full/Blind dataset Python classes, you should use the MatlabDatasetWrapper class, which exports a dataset to the Matlab workspace.

Parameters:

- train_generator (str) – Name of the Matlab imageDatastore variable (for instance, if you have train_imds in your workspace, you should pass ‘train_imds’ for train_generator).

- valid_generator (str) – Name of the Matlab imageDatastore variable (for instance, if you have valid_imds in your workspace, you should pass ‘valid_imds’ for valid_generator).

- n_epochs (int) – Number of training epochs.

- n_stages (int) – Number of image batches drawn during a training epoch.

- learning_rate (float) – Initial learning rate for optimization.

- optimizer_name (str) – One of {‘sgdm’, ‘adam’, ‘rmsprop’}.

See also

data.MatlabDatasetWrapper – for the class providing data to train such kinds of models.

class model.OnnxModel(model_name='DeepLearningModel', return_diff=False)

Bases: OpenDenoising.model.abstract_deep_learning_model.AbstractDeepLearningModel

Onnx models class wrapper. Note that Onnx models only support inference, so training is unavailable.

runtime_session: onnxruntime session used to run inference.

Type: onnxruntime.capi.session.InferenceSession

model_input: onnxruntime input tensor.

Type: onnxruntime.capi.onnxruntime_pybind11_state.NodeArg

model_output: onnxruntime output tensor.

Type: onnxruntime.capi.onnxruntime_pybind11_state.NodeArg

See also

model.AbstractDenoiser – for the basic functionalities of Image Denoisers.

model.AbstractDeepLearningModel – for the basic functionalities of Deep Learning based Denoisers.

__call__(self, image): Denoises a batch of images.

Parameters: image (numpy.ndarray) – 4D batch of noisy images, with shape (batch_size, height, width, channels).

Returns: Restored batch of images, with the same shape as the input.

Return type: numpy.ndarray

__init__(self, model_name='DeepLearningModel', return_diff=False): Common interface for Deep Learning based image denoisers.

model: Object representing the Denoiser Model in the framework used.

logdir: String containing the path to the model log directory. This directory will contain training information as well as model checkpoints.

Type: str

train_info: Dictionary containing the time spent on training, how many parameters the network has, and whether it has been trained.

Type: dict

__len__(self): Counts the number of parameters in the network.

Returns: nparams – Number of parameters in the network.

Return type: int

charge_model(self, model_path=None): Charges an Onnx model into the class wrapper. It uses the onnx module to load the model graph from a .onnx file, then creates a runtime session with the onnxruntime module.

Parameters: model_path (str) – String containing the path to the .onnx model file.

class model.MatconvnetModel(model_name='MatconvnetModel', return_diff=False)

Bases: OpenDenoising.model.abstract_deep_learning_model.AbstractDeepLearningModel

Matlab Matconvnet wrapper class.

Notes

Matconvnet models are available only for inference.

denoising_func: Function object representing the Matlab function responsible for denoising images.

Type: function

See also

model.AbstractDenoiser – for the basic functionalities of Image Denoisers.

model.AbstractDeepLearningModel – for the basic functionalities of Deep Learning based Denoisers.

__call__(self, image): Denoises a batch of images.

Parameters: image (numpy.ndarray) – 4D batch of noisy images, with shape (batch_size, height, width, channels).

Returns: Restored batch of images, with the same shape as the input.

Return type: numpy.ndarray

__init__(self, model_name='MatconvnetModel', return_diff=False): Common interface for Deep Learning based image denoisers.

model: Object representing the Denoiser Model in the framework used.

logdir: String containing the path to the model log directory. This directory will contain training information as well as model checkpoints.

Type: str

train_info: Dictionary containing the time spent on training, how many parameters the network has, and whether it has been trained.

Type: dict

Built-in Architectures

Keras Architectures

model.architectures.keras.dncnn(depth=17, n_filters=64, kernel_size=(3, 3), n_channels=1, channels_first=False): Keras implementation of DnCNN, following the original paper [1]. The authors' original code can be found on their Github page.

Parameters:

- depth (int) – Number of fully convolutional layers in DnCNN. In the original paper, the authors used depth=17 for non-blind denoising and depth=20 for blind denoising.

- n_filters (int) – Number of filters in each convolutional layer.

- kernel_size (int tuple) – 2D tuple specifying the size of the kernel window used to compute activations.

- n_channels (int) – Number of image channels that the network processes (1 for grayscale, 3 for RGB).

- channels_first (bool) – Whether channels come first (NCHW, True) or last (NHWC, False).

Returns: Keras model object representing the neural network.

Return type: keras.models.Model

References

[1] Zhang K, Zuo W, Chen Y, Meng D, Zhang L. Beyond a Gaussian denoiser: Residual learning of deep CNN for image denoising. IEEE Transactions on Image Processing. 2017.

Examples

>>> from OpenDenoising.model.architectures.keras import dncnn
>>> dncnn_s = dncnn(depth=17)
>>> dncnn_b = dncnn(depth=20)
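The effect of depth, n_filters, kernel_size and n_channels on model size can be estimated by counting convolution weights. The hypothetical `conv_stack_params` below assumes every layer is a plain convolution with a bias term. The DnCNN paper places batch normalization in the middle layers, and an implementation may drop some biases, so the number reported by KerasModel.__len__ can differ from this estimate.

```python
def conv_stack_params(depth=17, n_filters=64, kernel_size=(3, 3), n_channels=1, bias=True):
    """Estimate parameters of a DnCNN-shaped stack of plain 2D convolutions.

    Layer 1: n_channels -> n_filters; layers 2..depth-1: n_filters -> n_filters;
    layer depth: n_filters -> n_channels. Batch normalization is not counted.
    """
    kh, kw = kernel_size
    shapes = [(n_channels, n_filters)]
    shapes += [(n_filters, n_filters)] * (depth - 2)
    shapes += [(n_filters, n_channels)]
    total = 0
    for c_in, c_out in shapes:
        # Weights of one conv layer plus (optionally) one bias per output channel.
        total += kh * kw * c_in * c_out + (c_out if bias else 0)
    return total


print(conv_stack_params(depth=17))  # 555137
print(conv_stack_params(depth=20))  # 665921
```

For depth=17 the middle 15 layers dominate: each holds 3 * 3 * 64 * 64 + 64 = 36928 parameters.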

model.architectures.keras.rednet(depth=20, n_filters=128, kernel_size=(3, 3), skip_step=2, n_channels=1): Keras implementation of RedNet, following the paper [1].

Notes

In [1], the authors suggested three architectures:

- RED10, which has 10 layers and does not use any skip connection (hence skip_step = 0)

- RED20, which has 20 layers and uses skip_step = 2

- RED30, which has 30 layers and uses skip_step = 2

Moreover, the default number of filters is 128, while the kernel size is (3, 3).

Parameters:

- depth (int) – Number of convolutional layers in RedNet (10, 20 and 30 for RED10, RED20 and RED30, respectively).

- n_filters (int) – Number of filters in each convolutional layer.

- kernel_size (list) – 2D tuple specifying the size of the kernel window used to compute activations.

- skip_step (int) – Step for connecting encoder layers with decoder layers through addition. For skip_step=2, every 2 layers, the j-th encoder layer E_j is connected with the i-th decoder layer D_i, where i = depth - j.

- n_channels (int) – Number of image channels that the network processes (1 for grayscale, 3 for RGB).

Returns: Keras Model representing the denoiser neural network.

Return type: keras.models.Model

References

[1] Mao, X., Shen, C., & Yang, Y. B. (2016). Image restoration using very deep convolutional encoder-decoder networks with symmetric skip connections. In Advances in Neural Information Processing Systems.

Tensorflow Architectures

model.architectures.tensorflow.dncnn(depth=17, n_filters=64, kernel_size=3, n_channels=1, channels_first=False): Tensorflow implementation of DnCNN, following the original paper [1]. The authors' original code can be found on their Github page.

Notes

The implementation was based on the following Github page.

Parameters:

- depth (int) – Number of fully convolutional layers in DnCNN. In the original paper, the authors used depth=17 for non-blind denoising and depth=20 for blind denoising.

- n_filters (int) – Number of filters in each convolutional layer.

- kernel_size (int tuple) – 2D tuple specifying the size of the kernel window used to compute activations.

- n_channels (int) – Number of image channels that the network processes (1 for grayscale, 3 for RGB).

- channels_first (bool) – Whether channels come first (NCHW, True) or last (NHWC, False).

Returns:

- input_tensor (tf.Tensor) – Network graph input tensor.

- is_training (tf.Tensor) – Placeholder indicating whether the network is being trained or evaluated.

- output_tensor (tf.Tensor) – Network graph output tensor.

References

[1] Zhang K, Zuo W, Chen Y, Meng D, Zhang L. Beyond a Gaussian denoiser: Residual learning of deep CNN for image denoising. IEEE Transactions on Image Processing. 2017.

Examples

>>> from OpenDenoising.model.architectures.tensorflow import dncnn
>>> (dncnn_s_input, dncnn_s_is_training, dncnn_s_output) = dncnn(depth=17)
>>> (dncnn_b_input, dncnn_b_is_training, dncnn_b_output) = dncnn(depth=20)

Pytorch Architectures

class model.architectures.pytorch.DnCNN(depth=17, n_filters=64, kernel_size=3, n_channels=1)

__init__(self, depth=17, n_filters=64, kernel_size=3, n_channels=1): Pytorch implementation of DnCNN, following the original paper [1]. The authors' original code can be found on their Github page.

Notes

This implementation is based on the following Github page.

Parameters:

- depth (int) – Number of fully convolutional layers in DnCNN. In the original paper, the authors used depth=17 for non-blind denoising and depth=20 for blind denoising.

- n_filters (int) – Number of filters in each convolutional layer.

- kernel_size (int tuple) – 2D tuple specifying the size of the kernel window used to compute activations.

- n_channels (int) – Number of image channels that the network processes (1 for grayscale, 3 for RGB).

References

[1] Zhang K, Zuo W, Chen Y, Meng D, Zhang L. Beyond a Gaussian denoiser: Residual learning of deep CNN for image denoising. IEEE Transactions on Image Processing. 2017.

Example

>>> from OpenDenoising.model.architectures.pytorch import DnCNN
>>> dncnn_s = DnCNN(depth=17)
>>> dncnn_b = DnCNN(depth=20)

forward(self, x): Defines the computation performed at every call.

Should be overridden by all subclasses.

Note

Although the recipe for the forward pass needs to be defined within this function, one should call the Module instance afterwards instead of this, since the former takes care of running the registered hooks while the latter silently ignores them.

Model utilities

model.utils.pb2onnx(path_to_pb, path_to_onnx, input_node_names=['input'], output_node_names=['output']): Converts a Tensorflow ProtoBuf file to an Onnx model.

Parameters:

- path_to_pb (str) – String containing the path to the .pb file holding the tensorflow graph to convert to Onnx.

- path_to_onnx (str) – String containing the path to the location where the .onnx file will be saved.

- input_node_names (list) – List of strings containing the input node names.

- output_node_names (list) – List of strings containing the output node names.

model.utils.freeze_tf_graph(model_file=None, output_filepath='./output_graph.pb', output_node_names=None): Freezes a tensorflow graph, then writes it to a new .pb file.

Parameters: